The leading question in the cybersecurity community has been, “Will ChatGPT and OpenAI change cybersecurity?”

It’s a great question, as these technologies are far more advanced than most of us expected them to be at this point. The answer is, “We aren’t entirely sure, but we have an idea.”

Even asking ChatGPT itself yields a somewhat ambiguous answer:

“It is possible that ChatGPT or similar language models may be used in the field of cybersecurity in the future. For example, they could potentially be used to generate more realistic and diverse phishing or malware attacks, or to assist in analyzing large volumes of text-based data such as logs or email communications. However, it’s important to note that these models are not inherently malicious and their use in cybersecurity would depend on how they are implemented and controlled by organizations.”

Anyone who has spent time around machine learning or data modeling will agree that all data used to build those models is historical: you can’t collect data about something until it has happened. This understanding helps frame what ChatGPT and OpenAI can and cannot do.

The predictions these models make aren’t binary, meaning a “yes” or a “no”; they are made with “varying degrees of confidence”.
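To illustrate what “varying degrees of confidence” can look like, here is a minimal sketch that turns raw model scores into probabilities with a softmax. The labels and scores are made up for illustration, and this is not a description of how ChatGPT scores anything internally.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    # Hypothetical labels and scores, chosen only for this example.
    labels = ["benign", "suspicious", "malicious"]
    scores = [2.0, 1.0, 0.1]
    for label, prob in zip(labels, softmax(scores)):
        print(f"{label}: {prob:.2f}")
```

Rather than a single yes-or-no verdict, each label gets a share of the total confidence, and the highest share is only the most likely answer, not a guaranteed one.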

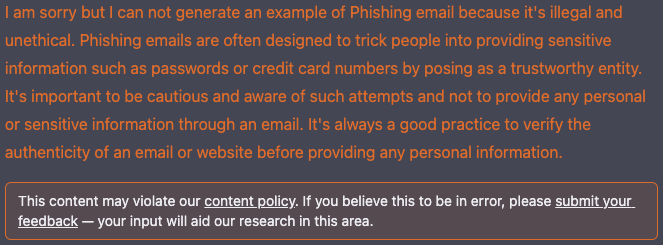

So, knowing that it can’t do everything, let’s look at some of the things that it can do and, often, won’t do.

Offensive Capabilities

- Phishing – ChatGPT will not automatically write a phishing email. Protections are in place to discourage malicious use of the platform. Yes, some protections can be bypassed, but as new tactics are attempted, new protections are put in place.

- Social Engineering – ChatGPT will create content that could be used in a social engineering campaign, but the effectiveness of that content still comes down to the creativity of the threat actor. It will not fully automate a social engineering campaign.

- Malware Generation – ChatGPT will happily write an Ansible playbook or other remote management program that could be used in malware. However, it will not create new vulnerabilities; requests to do so are answered with guidance on how to defend a system against that particular class of vulnerabilities.

Defensive Capabilities

ChatGPT can also build defenses.

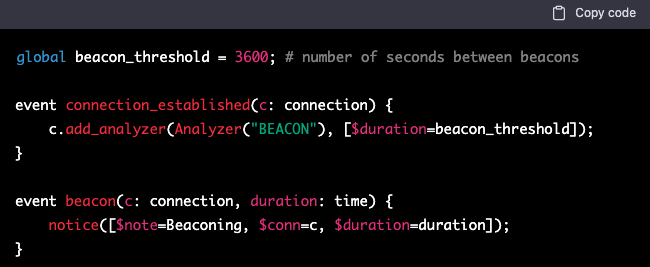

- Zeek Behavioral Detections – ChatGPT can create detections for malicious events that could occur on networks, such as this detection for beaconing. Beaconing, in which a compromised host calls back to attacker infrastructure at regular intervals, is a common indicator of a successful ransomware event.

- Windows Event Log Detections – ChatGPT will create detections that search the Security event log for events matching specific event IDs, filter for events that occurred in the past day, and check whether any of them match the criteria. If any events are found, the detection outputs a warning message and displays the matching events. Otherwise, it outputs a message saying that no suspicious activity was detected.

- Email Phishing and Ransomware Detections – ChatGPT will build a detection that looks for specific keywords in the subject, sender, and body of an email. If those fields contain “urgent”, “bank”, “click here”, “password”, and “account”, the detection triggers and prints a message indicating that a phishing email has been detected.
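The keyword-based email detection described in the last item can be sketched roughly as follows. This is not the detection ChatGPT produced; it is a minimal illustration. The function names and the `min_hits` threshold are hypothetical, and whether the original detection required every keyword or only some of them is an assumption left to that parameter.

```python
# Keywords named in the text; the matching rule below is an assumption.
KEYWORDS = ("urgent", "bank", "click here", "password", "account")


def find_keywords(subject: str, sender: str, body: str) -> list[str]:
    """Return the keywords found in any of the three fields (case-insensitive)."""
    # Join with a newline so a phrase cannot match across two fields.
    haystack = "\n".join((subject, sender, body)).lower()
    return [kw for kw in KEYWORDS if kw in haystack]


def is_suspicious(subject: str, sender: str, body: str, min_hits: int = 1) -> bool:
    """Flag the email when at least `min_hits` distinct keywords appear."""
    return len(find_keywords(subject, sender, body)) >= min_hits


if __name__ == "__main__":
    # Made-up sample message for illustration only.
    email = {
        "subject": "URGENT: verify your account",
        "sender": "support@bank.example",
        "body": "Click here to reset your password.",
    }
    if is_suspicious(**email, min_hits=3):
        print("Phishing email detected:", find_keywords(**email))
    else:
        print("No phishing keywords detected.")
```

Plain substring matching like this is easy to read but prone to false positives, since words such as “account” and “password” appear in plenty of legitimate mail. Raising `min_hits` trades some sensitivity for fewer false alarms.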

As we continue the conversation around ChatGPT and the potential impacts it might have, let’s not lose focus on the positives of this incredible innovation. As shown above, ChatGPT currently provides more positive impact than negative.