Léargas Security, like many other Extended Detection and Response (XDR) platforms, has become an essential part of modern cybersecurity. As cyber threats continue to grow in number and complexity, more organizations are turning to Léargas for comprehensive, proactive threat detection and response. One of the most significant advancements in the Léargas platform in recent years has been the integration of artificial intelligence (AI) and machine learning (ML) algorithms.

First, what is Léargas? Léargas is an advanced security platform that gives organizations a comprehensive approach to threat detection and response. Unlike traditional security solutions that focus on only specific parts of an organization’s infrastructure, the Léargas platform draws on data from multiple security tools and data sources, both on-premises and in the cloud, to provide a holistic view of the network, endpoints, and cloud environments. It combines security analytics, threat intelligence, and automated response capabilities to detect and respond to threats across the entire infrastructure.

By integrating artificial intelligence and machine learning, Léargas improves detection and speeds up response times for its subscribers. AI and ML algorithms can process large volumes of data from a growing number of sources in real time, identifying patterns and anomalies that may indicate an attack. This allows the Léargas platform to detect and respond to threats more quickly, reducing the risk of damage and data loss.
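To make "identifying anomalies" a little more concrete, here is a minimal, generic sketch of one of the simplest anomaly-detection techniques: flagging observations whose z-score against a historical baseline exceeds a threshold. This is not Léargas's implementation; the function name `flag_anomalies`, the 3.0 threshold, and the sample data (outbound connections per minute from a single host) are all hypothetical and chosen only for illustration.

```python
from statistics import mean, stdev


def flag_anomalies(baseline, observed, threshold=3.0):
    """Hypothetical helper: return (index, value, z) for each observation
    whose z-score against the baseline exceeds the threshold in magnitude."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # sample standard deviation of the baseline
    if sigma == 0:
        # Perfectly flat baseline: any deviation from it is treated as anomalous.
        return [(i, x, float("inf")) for i, x in enumerate(observed) if x != mu]
    flagged = []
    for i, x in enumerate(observed):
        z = (x - mu) / sigma  # how many standard deviations from normal
        if abs(z) > threshold:
            flagged.append((i, x, z))
    return flagged


# Hypothetical data: outbound connections per minute from one host.
baseline = [12, 15, 11, 14, 13, 12, 16, 14, 13, 15]
observed = [14, 13, 97, 12]
print(flag_anomalies(baseline, observed))  # flags only index 2, the spike to 97
```

A fixed z-score threshold is the simplest possible baseline: it assumes roughly stable traffic and will miss slow-building or seasonal anomalies, which is why ML-based detection generally relies on richer models of normal behavior.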

Here are some specific ways the Léargas platform uses AI and ML:

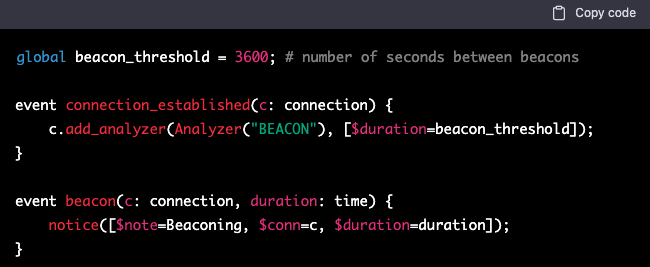

- Enhanced detection capabilities: AI and ML algorithms can analyze large volumes of data from various sources, such as network traffic, logs, and endpoints. This enables Léargas to detect advanced and emerging threats that traditional security solutions may miss.

- Faster response times: AI and ML algorithms can automate response actions, such as isolating infected endpoints, blocking malicious traffic, and containing the attack. This lets Léargas respond to threats quickly, shortening the time between detection and response.

- Reduced false positives: AI and ML algorithms can filter out false positives, reducing the number of alerts that security teams need to investigate. This saves time and resources, allowing security teams at Managed Service Providers (MSPs), Managed Security Service Providers (MSSPs), and independent organizations to focus on more critical threats.

- Improved threat intelligence: AI and ML algorithms can analyze threat intelligence data, identifying new patterns and trends that may indicate emerging threats. This helps Léargas stay ahead of the threat landscape with proactive threat detection and response. Léargas also partners with companies such as Critical Path Security to gain additional threat intelligence every day.

- Better risk management: AI and ML algorithms can provide risk scoring and prioritization, allowing security teams to focus on the most critical threats.

- Malware analysis: The Léargas platform and its supported EDR (Endpoint Detection and Response) solutions use machine learning to analyze suspicious behavior, identifying patterns that may indicate the presence of malware, ransomware, or an internal threat actor on the network.

Léargas strives to secure organizations by enhancing threat detection capabilities, speeding up response times, reducing false positives, improving threat intelligence, and providing better risk management.

As the threat landscape continues to evolve, organizations need advanced security solutions that can keep pace. The AI-powered Léargas security platform provides a proactive and comprehensive approach to cybersecurity, helping organizations stay one step ahead of cyber threats.

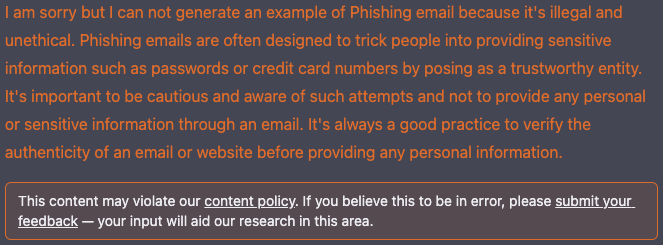

The leading question in the cybersecurity community has been, “Will ChatGPT and OpenAI change cybersecurity?”